In the beginning the universe was created.

The vast majority of programming languages out there come equipped with a comprehensive standard library. What these standard offer depends on the language. In the case of Java, for example, the JDK provides everything from networking to GUI. C++ comes with the Standard Template Library (STL), which offers an incredible variety of generic data structures and algorithms. Regardless of whichever ecosystem, these standard tools are used almmost ubiquitously regardless of their unwieldy sizes, arcane practices, and inaccessible documentation.

This made a lot of people angry and is widely considered a bad move.

Then there is good ole’ C. A step above Assembly, C remains the most ubiquitous systems programming language in the world, although it may at some point be usurped by Rust. Its standard library is notably lacking in comparison to a more popular systems language such as C++. No, seriously– compare every function in C’s standard library to every function in the C++ STL. There’s simply no competition; C comes with no conventional bells and whistles. If you need anything like a growable list such as std::vector or an ArrayList, you’re writing or finding a library that implements a growing buffer.

So how do C programmers get anything done without having any standard containers to hold their bits? They write their own or use a library like glib. Before even getting into the meat of a solution you’ve either added a dependency to your project or invested time rolling your own container. This is just a component and point of consideration when deciding what language to use. I think investing the time in rolling your own, at least once, is quite the educational endeavor. While researching how to implement a Hash Table (for example) from scratch, you’ll need understand hash algorithms. To implement a sorted set, you’ll likely need to immerse yourself in one of many self-balancing binary search trees. Eventually you’ll end up with a trove of snippets and ideas from varying sources, resulting in a toolbox of tricks.

Hashing

Fowler–Noll–Vo

Producing a hash from an arbitrary sequence of bytes is a non-trivial problem. Doing it quickly is even less trivial, especially when trying to produce the fewest amount of collisions possible. Enter the FNV hash algorithms, which offer O(n) complexity with fewer instructions than the majority of rolling hash implementations. The Fowler-Noll-Vo algorithm involves a prime number and integer offset, being 0x100000001b3L and 0xcbf29ce484222325L in the following example. These two magic numbers vary depending on which variant of the hash used, scaling anywhere from 32-bit to 1024-bit versions. This does not produce cryptographic hashes, so their results are not suitable for any purpose beyond hash tables or checksums… but it is very fast.

1 | vl_hash vlHashString(const void* data, vl_memsize_t dataSize){ |

The Value of an Identity Hash

On the topic of hash functions being unsuitable for the purposes of cryptography, consider the idea of an identity hash. An identity hash yields a result that is equivalent to its input. At first glance you might wonder what purpose could this serve– the function just returns what it is given. Look at it this way: There are no two inputs given to these functions that could possibly yield the same output. Numbers are inherently unique in their representations, meaning there are no two ways to represent the same exact integer on the same system. It is for this reason that I often choose to do entirely away with hashing specific number types, but rather just plain byte sequences that are the same length as these types: the idea behind hashing a 32-bit float and a 32-bit integer is the same. A downside to this approach is that the distribution of results is only as good as the distribution of inputs.

1 | vl_hash vlHash8(const void* data, vl_memsize_t s){ |

Combining Hashes

We’ve outlined methods on how to hash variable and well-defined sequences of bytes. What if we’re in a position where we need to combine them? I’ve experienced a need for this when using multiple byte sequences as a key in a Hash Table. There are much more than a few ways to do it, but I will outline two here:

Basic XOR

1

2

3vl_hash vlHashCombine(vl_hash a, vl_hash b){

return a ^ b;

}- Pros:

- It’s pretty darn fast.

- Good for mixing entropy.

- Cons:

- If both inputs are similar, it could make the result less random.

- Pros:

Multiplicative Combination

1

2

3

4

5

6vl_hash vlHashCombine(vl_hash a, vl_hash b){

static const vl_uint64_t prime1 = 0x9E3779B97F4A7C15ULL; // Large 64-bit prime

static const vl_uint64_t prime2 = 0xC2B2AE3D27D4EB4FULL; // Another large 64-bit prime

return (h1 * prime1) + (h2 * prime2);

}- Pros:

- Good for mixing entropy.

- Less prone to collisions than a basic XOR.

- Cons:

- Slightly slower than basic XOR due to higher complexity.

- Magic numbers!

- Pros:

IEEE-754 Floats

Floating point numbers have a format that is arguably less straightforward than something such as an unsigned integer. They more-or-less work by representing a number in scientific notation. While not all systems in the world use this specific floating point standard, it is a safe assumption that the ones you run into certainly will. I will not go over specifics about them here, but the layout under this standard is as follows:

Dicing your Floats

Despite their added complexity, they are just as much a bunch of 1s and 0s as anything else. We can re-interpret a float using bitwise instructions and separate out the individual parts.

1 | void vlFloat32Trisect(vl_float32_t val, vl_uint8_t* sign, vl_uint8_t* exponent, vl_uint32_t* mantissa){ |

Fast Inverse Square Root

While not the fastest in the land as it had once been, the fast inverse square root function is worth noting here. It takes advantage of the underlying bits of the floating point format and is historically significant. Quake 3 owes its (for the time) high-quality and performant reflections to this single function.

1 | float Q_rsqrt( float number ) |

Splitmix64 PRNG

I’m not sure there exists a simpler pseudo-random number generator (PRNG) than Splitmix64. Passing “Big Crush“, this algorithm takes some initial state integer (aka, seed) and pseudo-randomly permutes it. The seed can be any integer; decent ideas for a seed could be the current time or some runtime memory address. It is important to note that this should not be used for any cryptographic purposes.

1 | typedef vl_uint64_t vl_rand; |

Consider the resulting integer as a sequence of bits. We can derive any other integer type from the result by reinterpreting them into other integer types. Casting between a wide and thin integer type (e.g, vl_uint64_t and vl_uint8_t) is technically undefined behavior. By reinterpreting the results of the Splitmix PRNG, we are asking the compiler to make a bitwise copy between the two types.

1 | vl_int8_t vlRandInt8(vl_rand* rand){ |

SIMD-Like Properties Using Primitive Types

By now, you might have come across a theme in this article: everything is just bunch of 1s and 0s. If our random number generator generates 64 bits at a time, shouldn’t we be able to produce two 32-bit integers at once? What about eight 8-bit integers? Four 16-bit integers? The idea of using a single instruction to permute multiple pieces of data is called “single instruction, multiple data” (SIMD).

And we absolutely can, using nothing more than primitive types. We’ll save proper SIMD instructions for another day, it’s a much deeper topic. There shouldn’t be any problem assigning all 64 bits of our state to any memory address assigned to a block of memory that is, itself, at least as wide as the random number state.

1 | //Distribute sizeof(Splitmix State)-many bytes to specified memory address |

Generating Random Floats

We can also apply this same idea to floating point numbers, since we know the IEEE-754 layout. This allows us to not only avoid a costly division when generating random floating point numbers, but even allows us to produce two at a time in almost the same number of instructions.

1 | //bitmask for lower 23 bits |

Memory Alignmment

Believe it or not, every single memory allocation (probably) produces an address that is a multiple of its own width. For example: if a machine uses 8-byte memory addressess, memory is allocated at addresses that are multiples of 8. Memory accesses must be aligned on “natural boundaries“, meaning they must be a multiple of a power of two. Memory alignment has recursive properties for this reason. By defaulting to the largest integer type on the system, which is always going to be a memory address, it is more likely that all number types stored in the memory will also have their addresses aligned to their respective widths. Thankfully, our compilers and systems handle alignment without us having to think too hard about it.

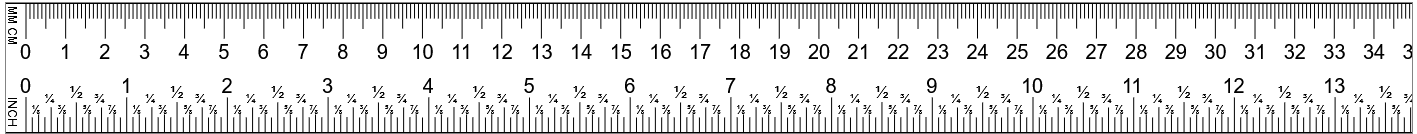

Here’s a visual representation of what I’m talking about. Consider a measuring stick, or ruler.

- Centimeters are guaranteed to be aligned to a multiple of 1/2 centimeter increments

- Centimeters are guaranteed to be aligned to a multiple of 1/4 centimeter increments

… and so on, and so forth. However, consider the opposite:

- 1/2 centimeter increments are NOT always aligned to 1 centimeter increments

- 1/4 centimeter increments are NOT always aligned to 1 centimeter increments

Now lets consider a 64-bit integer as having width of 1 centimeter, 32-bit integers as a 1/2 centimeter, etc. We can say with certainty that the following is true:

- 64-bit integers are always aligned to 16-bit increments

- 64-bit integers are always aligned to 32-bit increments

but…

- 16-bit integers are NOT always aligned to 64-bit increments

- 32-bit integers are NOT always aligned to 64-bit increments

So why is this useful to know if it isn’t my problem?

Well, because at some point it could be your problem. I briefly touched on single instruction, multiple data (SIMD) instructions already, where multiple pieces of data are permuted using a single instruction. These instructions also require alignment, but to a higher multiple than the default system alignment. SIMD truly shines when it operates on aligned data, suffering a performance hit on unaligned operations. If our goal is to operate on 16-byte (128-bit) spans using SIMD instructions, the address of the memory itself is better aligned to 16 byte increments.

Getting it aligned

Memory can be aligned by requesting a slightly larger size when allocating, and computing an offset such that the result address is some multiple of an alignment. Smart implementations might try to stash the origin pointer for later use, rather than managing it separately.

1 |

|

Summary

If it looks like a bunch of 1s and 0s, and it talks like a bunch of 1s and 0s, it’s probably just a bunch of 1s and 0s. I think it is freeing to think about memory in this polymorphic fashion, and it opens the doors to some interesting implementations when the language allows. Combining “less commonly known” aspects of the “most common denominator” computing environment can make for some quite performant code, as seen by the implementation of fast inverse square root. It’s interesting just how many spaces implementing containers can take you.

TL;DR I think this kind of thing is pretty freakin’ neat. I hope some of you out there do too!

Best,

J